BLOG

How do we build generative AI we can trust?

Understanding how foundation models are different and making the right decisions across Architecture, Security and Responsible AI.

5-minute read

June 1, 2023


I had a telling moment with generative AI (Gen AI) a few weeks ago. I was thinking aloud about a summary I needed to finish on how digital technologies have impacted the workforce.

Overhearing this, and impatient for me to be finished, my nine-year-old daughter said, “Why don’t you just use ChatGPT?” Then she explained that I could use it to get a first draft and then edit it to suit my needs.

I was shocked. She not only knew what ChatGPT was but also understood its strengths and weaknesses.

In that moment I realized just how much this technology has captured the world’s attention. And how fast. ChatGPT has done for large language models (LLMs), and the larger class of foundation models that power Gen AI, what no academic paper could ever hope to.

I shouldn’t have been surprised. I’ve been excited about the potential of foundation models for a long time now.

This goes back to work we did with Stanford University starting in 2019. By then, neural machine translation had already radically changed Google Translate, shrinking 500,000 lines of code to just 500 lines of TensorFlow.

Our early work together focused on making it more efficient for domain experts to label the data needed to train machine learning models, work that today also applies to fine-tuning foundation models. Labeling is costly: it may take an expensive expert an hour to decide whether a given loan should be approved or rejected, that is, to “label” a single data point.

More recently, in 2021, I wrote a blog post about my growing excitement for this new data-centric AI. And Stanford Professor Chris Ré went on to commercialize the ability to build and fine-tune foundation models through companies like Snorkel and SambaNova.

But it was my daughter’s comment that really brought home how pervasive the impact of Gen AI had become. It confirmed what I already suspected: this technology was going to be a very big deal.

It’s no surprise that so many businesses are excited. Accenture’s research has found that Gen AI will reinvent work and affect every kind of company, in many different ways.

But for the technology to be enterprise-ready, we need to be able to trust it. And that raises a range of questions.

For example, companies wonder how much they can trust the accuracy and relevance of a model’s outputs. They’re also asking whether these models are safe and secure to use.

And then there’s the broader question of social responsibility. Businesses need to be confident that they’re using the technology in the right way and not exposing themselves to ethical and legal risks.

The good news is that there are answers to these questions.

It begins with understanding how foundation models are fundamentally different from what came before them.

Previously, getting better results from AI was typically a question of “more”—you created more models for more use cases. And you needed more data scientists to build them.

Foundation models change that. Because they’re trained on internet-scale data, a single model can be adapted and fine-tuned for many different use cases.

So now it’s about less. You need far fewer models. The emphasis shifts from model building to model fine-tuning and usage. And from data science to more domain expertise and data engineering.

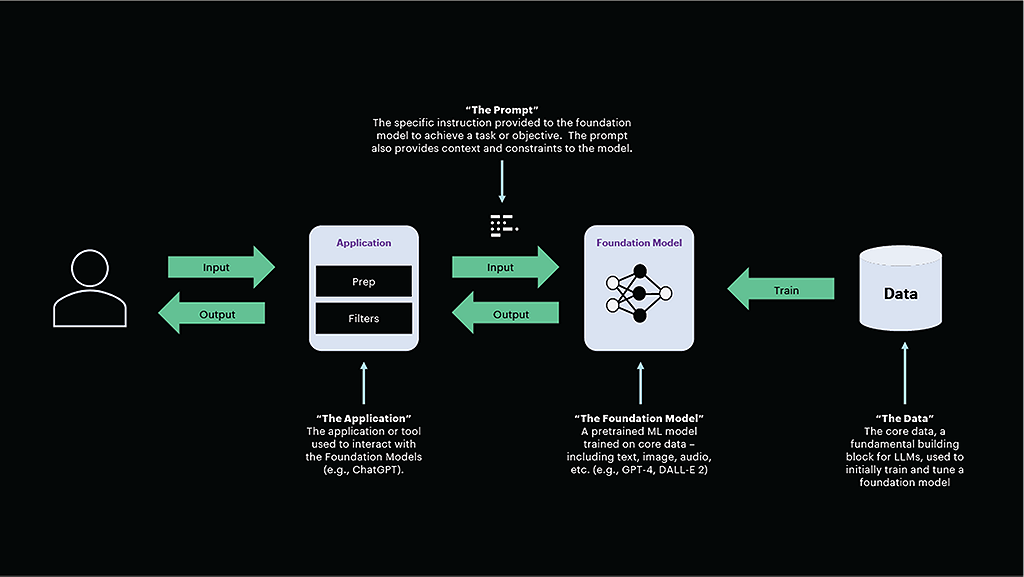

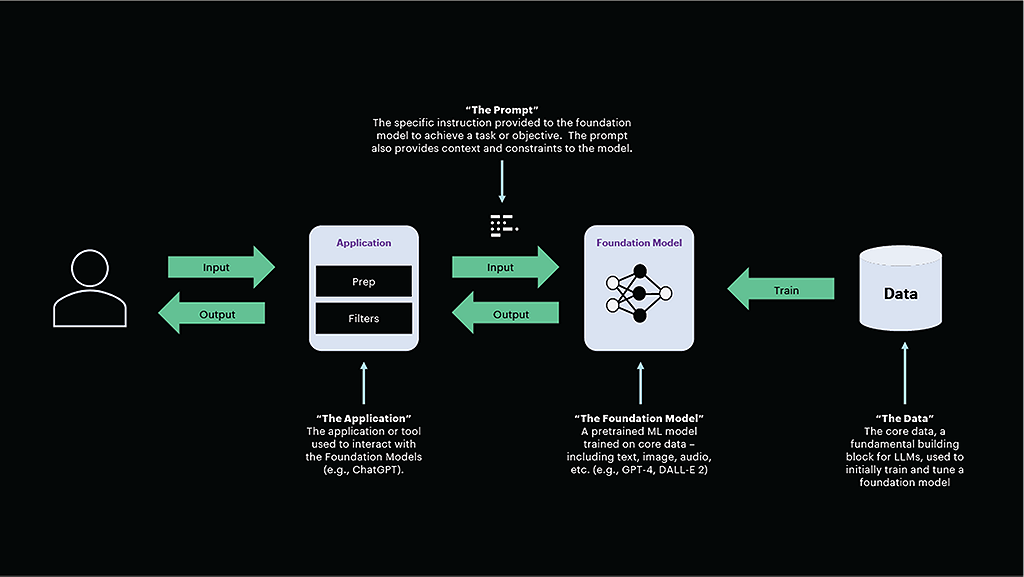

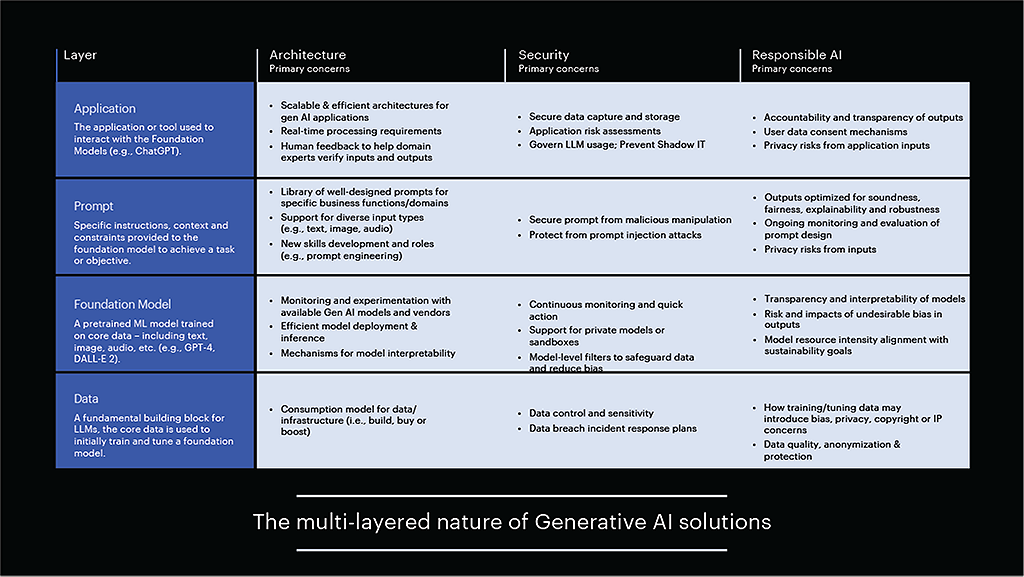

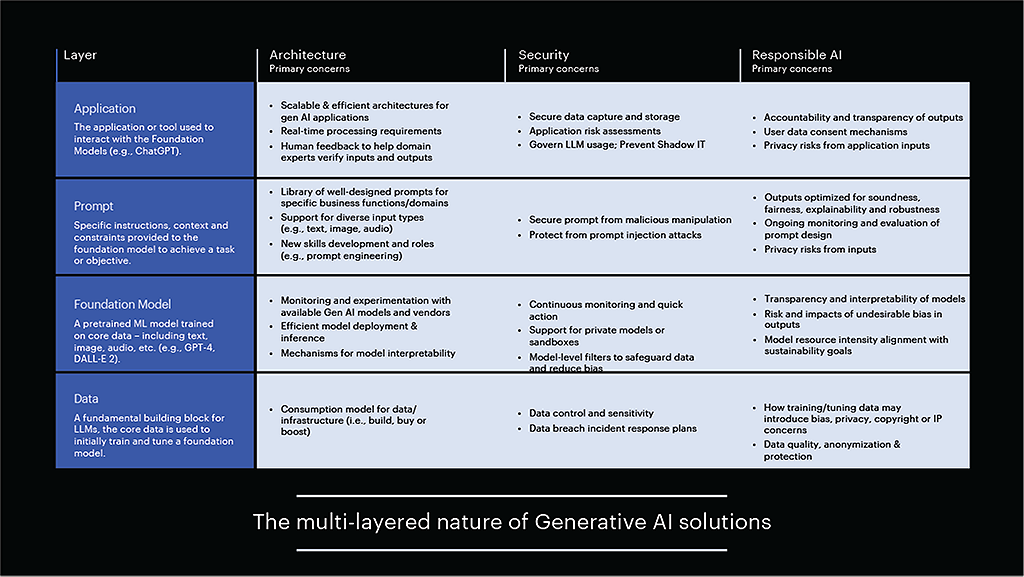

To tap into foundation models, companies need to understand four layers: the data, the model itself, the prompt request and the application.

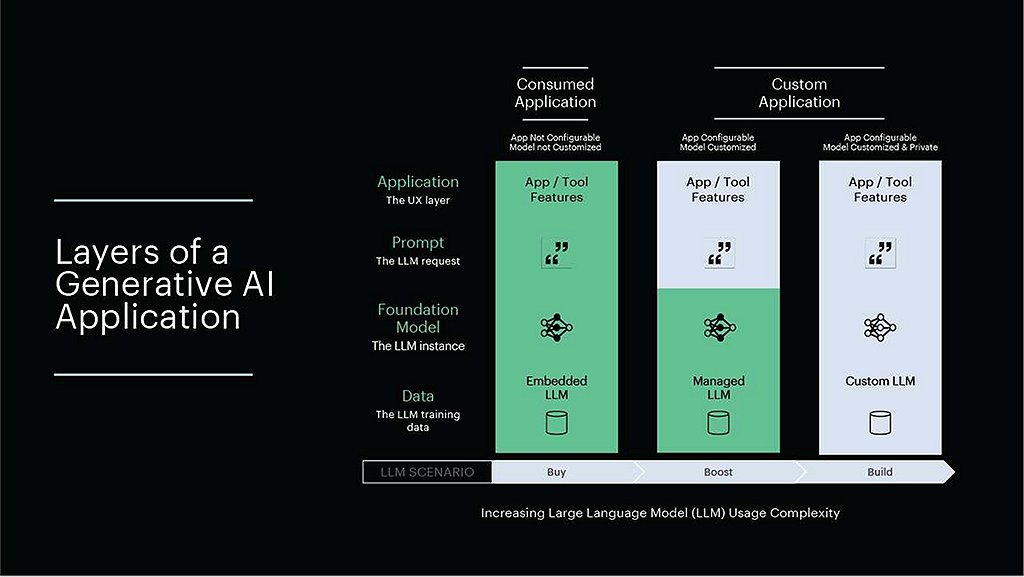

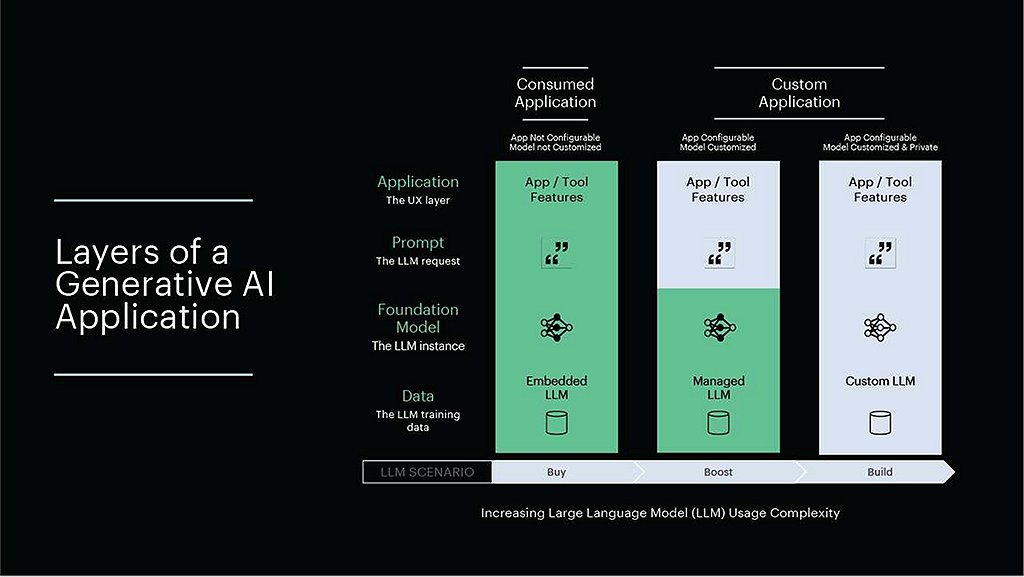

The way a company consumes and controls a foundation model across these layers will determine how it approaches questions of trust. We can think of this as a choice between buying, boosting and building.

At one extreme, you can buy an end-to-end service from a provider that spans all four layers, similar to how we consume software-as-a-service in the cloud. What you gain in speed and simplicity, you lose in control and customization.

At the other extreme, you can build your own model, trained with your own data, that’s totally under your control. Some companies will supply the entire corpus of data needed to build a custom model, but most that go this route will start with an open source LLM and add their own domain data to its existing corpus. A commitment this big won’t suit every business at the outset.

A middle way is to boost an existing foundation model by fine-tuning it with your own data and building your own prompt and application layers on top. Fine-tuning needs far fewer examples of domain data than pretraining does, so the company gains some customization quickly.

Which approach to choose depends on what you need to control.
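To make the trade-off concrete, here is a minimal sketch that records which of the four layers (data, model, prompt, application) a company itself controls under each consumption pattern described above, then picks the least demanding pattern that still covers the layers you need to control. The mapping is a simplification: it treats the model layer in “boost” as provider-owned even though fine-tuning customizes it. The `choose_pattern` helper and the `CONTROLLED_LAYERS` table are hypothetical illustrations, not an established method.

```python
from enum import Enum


class Layer(Enum):
    DATA = "data"
    MODEL = "model"
    PROMPT = "prompt"
    APPLICATION = "application"


# Simplified, hypothetical reading of the text: layers the company itself controls.
CONTROLLED_LAYERS: dict[str, frozenset[Layer]] = {
    "buy": frozenset(),  # provider runs all four layers end to end
    "boost": frozenset({Layer.DATA, Layer.PROMPT, Layer.APPLICATION}),  # fine-tune a provider's model
    "build": frozenset(Layer),  # own model, own data: all four layers
}

# Patterns ordered from least to most commitment.
PATTERNS_BY_COMMITMENT = ["buy", "boost", "build"]


def choose_pattern(must_control: set[Layer]) -> str:
    """Return the least demanding pattern whose controlled layers cover must_control."""
    for pattern in PATTERNS_BY_COMMITMENT:
        if must_control <= CONTROLLED_LAYERS[pattern]:
            return pattern
    # Unreachable with the table above, since "build" controls every layer.
    raise ValueError("no pattern covers the requested layers")
```

For example, `choose_pattern({Layer.PROMPT, Layer.APPLICATION})` returns `"boost"`, while any requirement that includes `Layer.MODEL` leads to `"build"`. A real decision would also weigh speed, cost and available skills, which this sketch ignores.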

A good example is how Gen AI is being used in software development. In our own experience, Gen AI-powered tools led not only to better developer efficiency but also to greater job satisfaction. Developers liked how Gen AI automated mundane tasks they didn’t enjoy anyway, freeing them to focus on more meaningful work.

There are end-to-end solutions like GitHub Copilot and Amazon CodeWhisperer that are ready to buy and use. Others, like Tabnine, let you customize a private model by boosting it with your own data and run it in its own environment. And then there’s the option to build on open source models like StarCoder to create your own custom model.

These layers and consumption patterns then feed into a series of key business decisions that are directly related to trust.

First: What architecture best ensures model outputs are relevant, reliable and usable? Depending on the consumption model they choose, companies will need to manage new components like knowledge graphs, vector databases and prompt engineering libraries. They may even need to host and manage their own infrastructure, as well as the applications and interfaces that business users rely on to access the models and provide feedback on them.

Second: How do we ensure models are secure and safe to use? This brings up considerations like IP and data leakage, safeguarding the sandboxes, protecting against prompt injection attacks and managing the risk of shadow IT spreading AI across the business without proper oversight.

Third: How do we make sure Gen AI is responsible AI? This is especially important given how accessible and democratized the technology has become. Here, it’s about updating guidelines and training, ensuring everyone understands the risks, identifying bias in training data and paying attention to evolving legal issues around IP infringement.

There’s lots more to say about these areas, and we’ll be diving into the detail of each one in subsequent blog posts.

Ultimately, it’s about answering one central question: How do we build generative AI we can trust?

That’s going to be critical in ensuring employees, customers and businesses—all of us—can actually get value from this technology.

Together—and perhaps with some help from my nine-year-old—we’re going to find the answer.